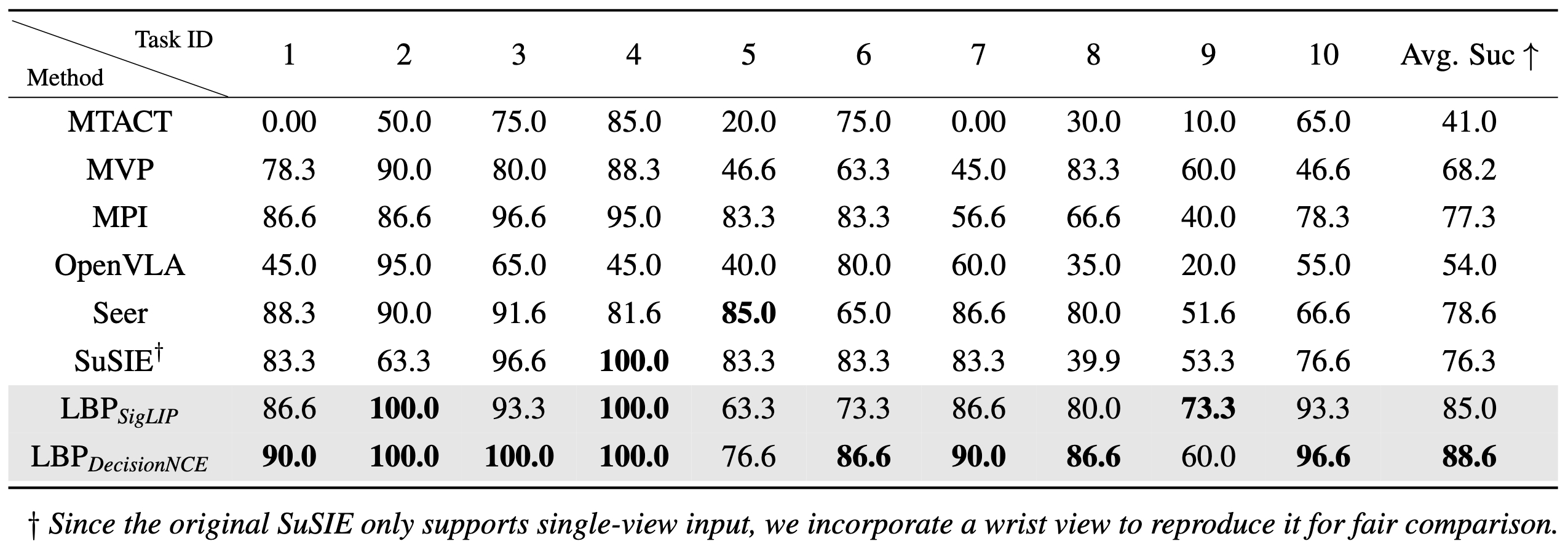

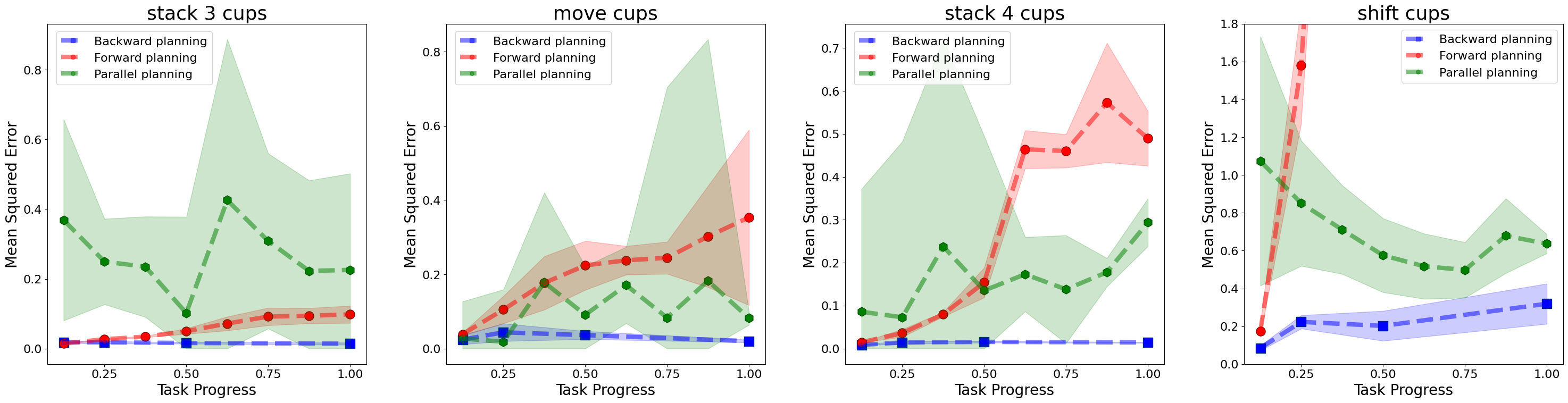

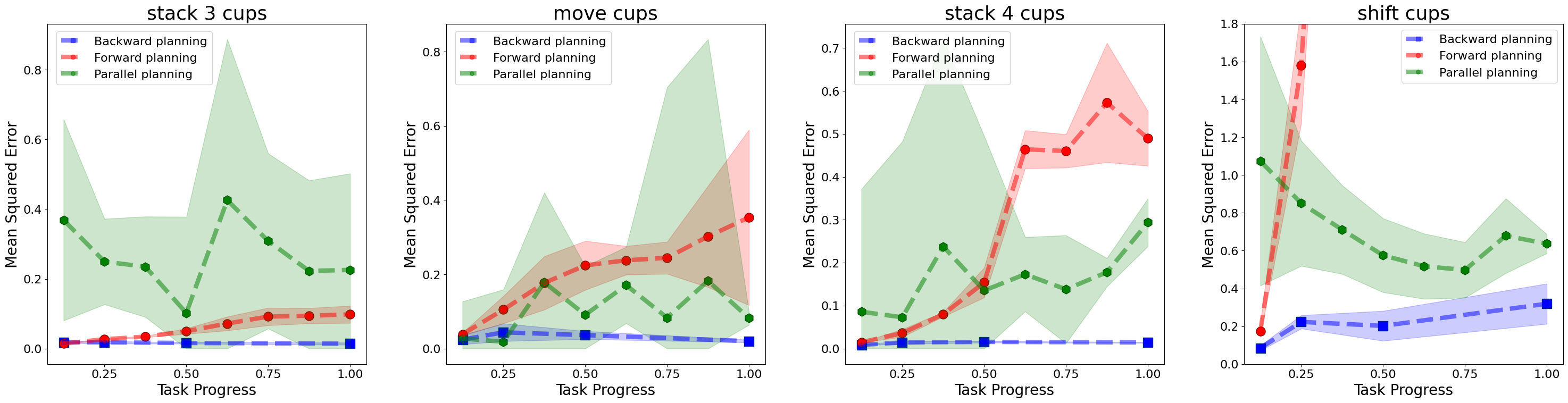

To evaluate the effectiveness of the backward planning approach, we compare it against a conventional forward planner and a parallel planner, both using the same hyperparameter settings to ensure a fair comparison. While the LBP model predicts subgoals progressively in a backward manner, the forward planner predicts the subgoal 10 steps into the future, and the parallel planner predicts all subgoals simultaneously. We randomly sample 3,000 data points, each representing a current state, from our real-robot datasets and compute the mean squared error (MSE) between the predicted subgoals and their corresponding ground truths. Figure 5 visualizes the results, with normalized task progress on the x-axis.

Figure 5. Mean Squared Errors (MSE) between predicted subgoals and corresponding ground truths in forward, parallel and backward planning (ours).
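As a rough illustration of how curves like those in Figure 5 could be produced, the sketch below computes a per-sample MSE and averages it within bins of normalized task progress. The function name `mse_by_progress`, the array layout, and the number of bins are assumptions made for this example; they are not taken from the LBP codebase.

```python
import numpy as np

def mse_by_progress(pred, target, progress, num_bins=10):
    """Average per-sample MSE within equal-width bins of normalized progress.

    pred, target: arrays of shape (N, ...) holding predicted and ground-truth subgoals.
    progress: array of shape (N,) with normalized task progress in [0, 1].
    Returns (mean_mse_per_bin, samples_per_bin); empty bins are reported as NaN.
    """
    pred = np.asarray(pred, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    progress = np.asarray(progress, dtype=np.float64)

    # Per-sample MSE: mean squared error over all non-batch dimensions.
    per_sample = ((pred - target) ** 2).reshape(len(pred), -1).mean(axis=1)

    # Map progress to a bin index; clip so that progress == 1.0 lands in the last bin.
    bin_idx = np.clip((progress * num_bins).astype(int), 0, num_bins - 1)

    sums = np.bincount(bin_idx, weights=per_sample, minlength=num_bins)
    counts = np.bincount(bin_idx, minlength=num_bins)
    with np.errstate(invalid="ignore", divide="ignore"):
        means = np.where(counts > 0, sums / counts, np.nan)
    return means, counts
```

Running this once per planner (forward, parallel, backward) on the same sampled states would give one curve per method, which can then be plotted against the bin centers.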

The compounding errors of forward planning grow rapidly across all tasks. In particular, for the most challenging task, Shift Cups, the prediction error becomes unacceptably large when forecasting distant subgoals. The issue is exacerbated in approaches that attempt to predict continuous future image frames, where compounding errors can be even more severe.
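The compounding effect can be seen in a deliberately simplified toy example, which is not the planner evaluated here: a scalar "subgoal" advances by one unit per step, and a hypothetical one-step predictor overshoots each step by a fixed `step_bias`. Because every prediction is conditioned on the previous prediction rather than on the ground truth, the squared error grows with the prediction horizon.

```python
def forward_rollout_errors(num_subgoals, step_bias):
    """Squared error at each horizon step for a toy chained (forward) rollout.

    The true trajectory advances by exactly 1.0 per step; the toy predictor
    advances by 1.0 + step_bias, starting from its own previous prediction.
    """
    errors = []
    predicted, truth = 0.0, 0.0
    for _ in range(num_subgoals):
        truth += 1.0
        predicted += 1.0 + step_bias  # each step's error is added to the earlier ones
        errors.append((predicted - truth) ** 2)
    return errors


errors = forward_rollout_errors(num_subgoals=3, step_bias=0.5)
print(errors)  # [0.25, 1.0, 2.25]
```

In this toy setting the bias accumulates linearly, so the squared error grows quadratically with the horizon; real learned planners will behave differently in detail, but the dependence of later predictions on earlier ones is the same mechanism.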

Although parallel planning avoids error accumulation by predicting all subgoals simultaneously, its predictions are consistently inaccurate across the entire planning horizon. We attribute this limitation to the difficulty of the training objective, which requires simultaneous supervision of all subgoals. Such an approach demands greater model capacity and incurs significantly higher computational costs.

In contrast, our backward planning method maintains consistently low error across the entire planning horizon. These results highlight the advantages of our approach, which enables both efficient and accurate subgoal prediction.